Welcome to the future we deserve.

Technicians at McAfee, Inc. wanted to test how well Tesla’s Autopilot system actually worked. So they took a strip of electrical tape and stuck it across the middle of the “3” on a “35 miles per hour” speed limit sign, tricking the car into reading the sign as “85 miles per hour”.

The test capped 18 months of research, according to Bloomberg.

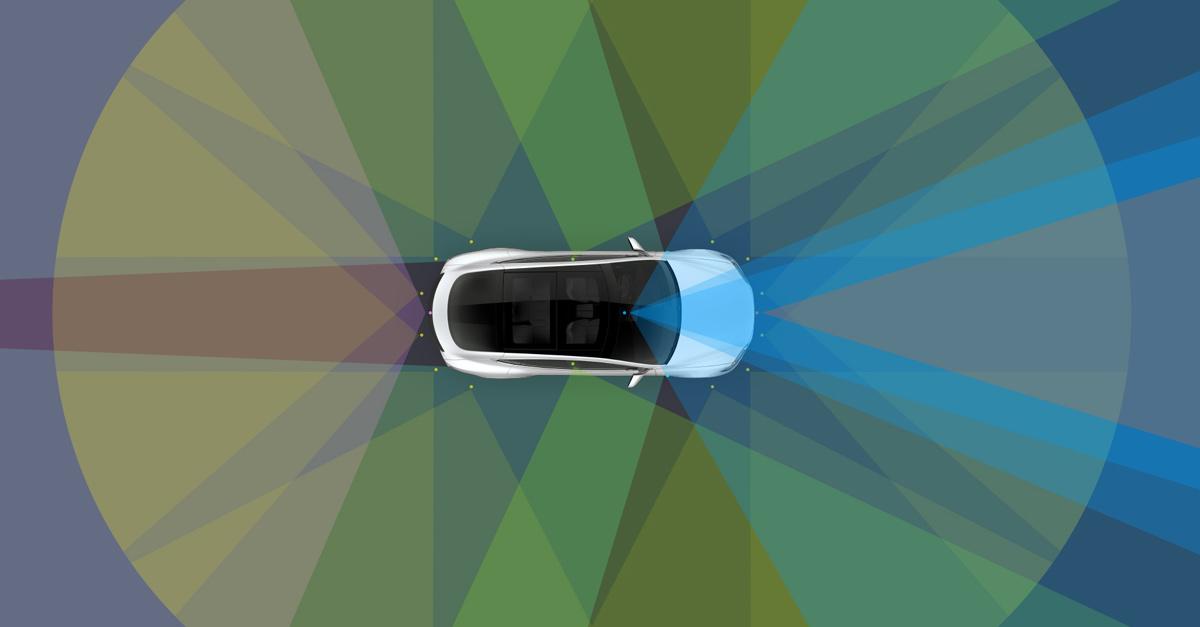

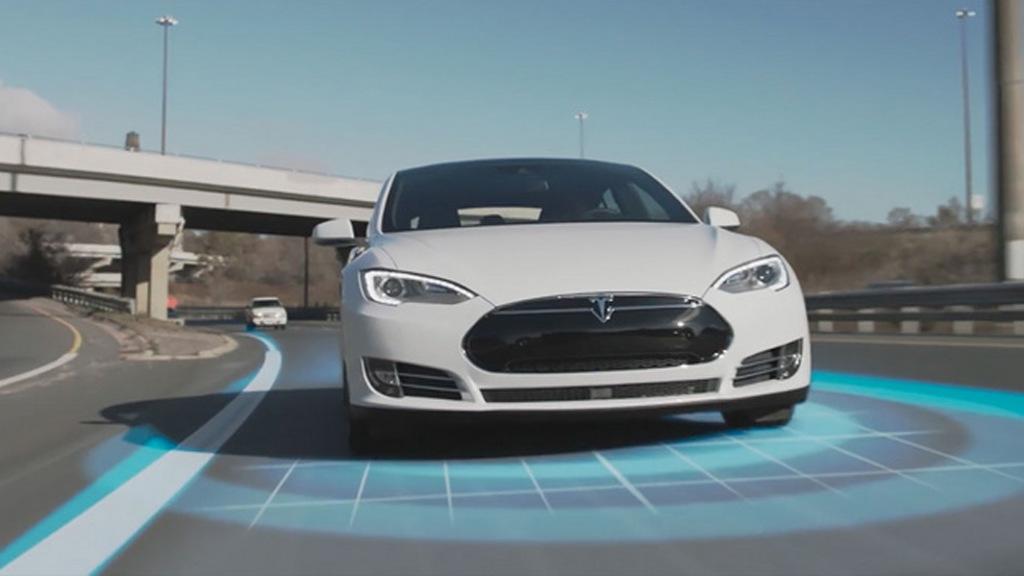

For the test, McAfee’s researchers used a 2016 Model S and Model X that had camera systems supplied by Mobileye under Tesla’s earlier agreement with the company, which ended in 2016. Tests performed on Mobileye’s newest camera system didn’t reveal the same vulnerabilities.

Mobileye defended its technology in a statement to Bloomberg, arguing that a human driver could also have been fooled by the same kind of sign modification.

“Autonomous vehicle technology will not rely on sensing alone, but will also be supported by various other technologies and data, such as crowd sourced mapping, to ensure the reliability of the information received from the camera sensor and offer more robust redundancies and safety,” Mobileye said.

McAfee’s technicians also noted that Teslas don’t rely on traffic sign recognition.

Steve Povolny, head of advanced threat research at McAfee, commented:

“Manufacturers and vendors are aware of the problem and they’re learning from the problem. But it doesn’t change the fact that there are a lot of blind spots in this industry.”

The real-world risk of something similar happening is relatively low. Self-driving cars are still in the development stage and are mostly being tested with safety drivers behind the wheel. That is, of course, unless you’re one of the “lucky” beta testers driving around with your Tesla on Autopilot.

McAfee’s researchers say they were able to trick the system only by reproducing a “sequence involving when a driver-assist function was turned on and encountered the altered speed limit sign.”

“It’s quite improbable that we’ll ever see this in the wild or that attackers will try to leverage this until we have truly autonomous vehicles, and by that point we hope that these kinds of flaws are addressed earlier on,” Povolny concluded.

The weakness isn’t specific to Tesla or Mobileye technology; it’s inherent in all self-driving systems.

Missy Cummings, a Duke University robotics professor and autonomous vehicle expert, summed it up: “And that’s why it’s so dangerous, because you don’t have to access the system to hack it, you just have to access the world that we’re in.”